In this blog post, I reflect on my experiences from conducting a grounded theory study as a software engineering researcher in summer 2010. In the study, Peggy and I examined the role of a community portal, such as IBM’s Jazz or Microsoft’s MSDN, in the process of communicating software development knowledge. We just presented the results of the study at ESEC/FSE in September 2011 (paper pre-print). This is far from the first blog post on experiences using grounded theory. To read about other researchers’ experiences, you might want to take a look at L. Lennie Irvin’s collection of blog posts on grounded theory or the 2008 CASCON paper by Steve Adolph from UBC.

The Corbin / Strauss approach

Grounded theory is a systematic methodology to generate theory from data. The methodology originates from the Social Sciences and aims at studying social phenomena. There are different stances on how grounded theory should be carried out, most notably the positivist approach described by Anselm Strauss and the more interpretative view described, for example, by Kathy Charmaz.

In our study, we followed the grounded theory approach as described by Juliet Corbin and Anselm Strauss in the Qualitative Sociology journal. They specify eleven procedures and canons that grounded theory researchers as well as the readers and evaluators of grounded theory studies should be familiar with:

- Data collection and analysis are interrelated processes. When grounded theory is used, data analysis begins as soon as the first bit of data is collected.

- Concepts are the basic units of analysis. Incidents from various data sources (in our case: interview transcripts, documentation artifacts, and ethnographic field notes) are given “conceptual labels”. The focus is on concepts that “earn their way into the theory by being present repeatedly”.

- Categories must be developed and related. Categories are more abstract than labels and can explain relationships between concepts. A category must be developed in terms of its properties, dimensions, conditions and consequences.

- Sampling in grounded theory proceeds on theoretical grounds. Sampling in grounded theory focuses on “incidents, events and happenings” (in our case: all incidents that were related to the creation or use of artifacts posted on a community portal).

- Analysis makes use of constant comparisons. When a new incident is noted, it has to be compared against other incidents for similarities and differences.

- Patterns and variations must be accounted for. Data must be examined for regularity as well as for irregularities.

- Process must be built into the theory. Grounded theory is about understanding processes.

- Writing theoretical memos is an integral part of doing grounded theory. To make sure that no concepts or categories are forgotten, memos have to be written throughout the course of the study.

- Hypotheses about relationships among categories should be developed and verified as much as possible during the research process. Hypotheses are constantly revised until they hold true for all of the evidence gathered in the study.

- A grounded theorist need not work alone. Concepts, categories and their relationships must be tested with other researchers.

- Broader structural conditions must be analyzed, however microscopic the research. A grounded theory study should specify how the microscopic perspective links with broader conditions (in our case: how does the particular community portal in our study compare to other portals?).

In grounded theory, coding is the fundamental process that researchers use to make sense of their data. Coding is done in three steps:

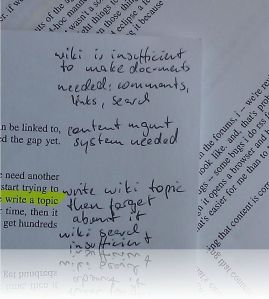

- Open: Data is annotated line by line (see picture above for an example from our study) and concepts are created when they are present repeatedly. Open coding is applied to all data collected (in our case: interview transcripts, documentation artifacts, and ethnographic field notes). Based on the concepts, more abstract categories are developed and related. Each category has properties, dimensions, conditions, and consequences.

- Axial: Data is put together in new ways by making explicit connections between categories and sub-categories.

- Selective: The core category is identified and systematically related to other categories.
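The bookkeeping behind open coding can be sketched in a few lines of Python. This is only an illustration, not the procedure we used in the study: the source names, line numbers, and conceptual labels below are hypothetical, and the threshold of two incidents is an assumption that stands in for "present repeatedly".

```python
from collections import defaultdict

# Hypothetical open-coding annotations: (source, line number, conceptual label).
annotations = [
    ("interview-D1", 12, "asks for examples"),
    ("interview-M1", 4, "asks for examples"),
    ("field-notes", 30, "prefers wiki"),
    ("interview-D1", 27, "asks for examples"),
]


def recurring_concepts(annotations, min_incidents=2):
    """Group incidents by label and keep labels seen at least min_incidents times."""
    incidents = defaultdict(list)
    for source, line, label in annotations:
        # Store where each label was applied so codes stay traceable to data.
        incidents[label].append((source, line))
    return {
        label: refs
        for label, refs in incidents.items()
        if len(refs) >= min_incidents
    }


concepts = recurring_concepts(annotations)
```

Keeping the source and line number with every label is what gives traceability from a concept back to the incidents that support it. Deciding which concepts belong to which category remains a judgment call for the researcher and is not something this sketch attempts.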

Making grounded theory explicit

For qualitative researchers, many of the guidelines described by Corbin and Strauss are nothing new; in fact, we found that we had already followed several of them implicitly in previous studies. For example, when conducting interviews, researchers tend to revise their questions in later interviews based on the answers given in earlier ones, and data collection is rarely completely separate from data analysis. However, there was a lot of benefit in making this process explicit:

- We didn’t have to plan out every detail of our study beforehand. This is often a challenge in exploratory field research where researchers are not aware of all peculiarities of the setting they are about to conduct a study in. When using grounded theory, it is “officially” part of the research methodology that questions are refined over time, that not all interviewees are pre-determined, and that the resulting theme is unknown beforehand.

- Similarly, we were able to change direction during the study when we found interesting themes to follow up on. Again, this is something that frequently happens in qualitative research, but grounded theory makes it explicit.

- Grounded theory focuses on concepts that become part of the theory because they are present in the data more than once. This makes it easier for researchers to focus on themes that are relevant in the study context rather than themes that only matter to the researcher.

- Especially during open coding, the use of grounded theory helps researchers set aside preconceptions of how and why certain incidents occur. Going through interview transcripts or ethnographic field notes line by line forces researchers to think about every aspect of the data collected.

- Grounded theory also allows researchers to consider everything they encounter during a study, such as anecdotes or water-cooler conversations. This is not possible with a pre-defined set of interviewees or data sources.

The emergence of the core category

Going into the grounded theory study, I was concerned that after all the open and axial coding, no “core category” would emerge from the data. In fact, the way it is conventionally described makes it seem a bit like magic: “Sufficient coding will eventually lead to a clear perception of which category or conceptual label integrates the entire analysis.”

At least from our experience, I can say that we did encounter a core category that came out quite clearly at the end of the selective coding. One of the challenges is to abstract the core category to the right level. For example, in our case, we found several interesting differences between artifacts on a community portal such as blog posts, wiki pages, and technical articles. While no single one of these differences stood out, we identified the fact that artifacts differ along several dimensions as the core category.

The role of research questions

We found the role of research questions tricky when using grounded theory as a methodology. As Corbin and Strauss describe it, “each investigator enters the field with some questions or areas for observation, or will soon generate them. Data will be collected on these matters throughout the research endeavor, unless the questions prove, during analysis, to be irrelevant.”

Researchers have questions going into a study, but these questions are refined, changed, and altered throughout the study. This presents a challenge when reporting the research questions for a study. To be thorough, one would have to report the initial questions along with their iterations over the course of the study. As research papers aim at the dissemination of research results rather than a discussion of the research process itself, we found it more useful to report the final set of questions.

Lack of tool support

Coding of ethnographic field notes, interview transcripts and software artifacts is tedious. Several researchers have developed tools to help with that process, in particular by offering traceability between data and codes. Examples of such tools include Saturate, Qualyzer, Atlas.ti, MaxQDA and Weft QDA.

Unfortunately, I found that with all these tools, attaching codes to data and relating codes to each other is hard to do on a computer. After trying several tools (after all, as a Computer Science student I’d like to believe that computers can solve complex editing and annotation tasks), I gave up, printed all the data in font size 8, and went back to using pen and paper. While the traceability is only achieved by following hand-written annotations, it felt a lot more natural to annotate data “by hand”. We need a metaphor better than a list of file names to support our cognition when several sheets of paper are involved.

Reporting a grounded theory study

It is challenging to write a paper describing a qualitative study, even when there is no grounded theory involved. Reporting the qualitative coding in sufficient detail for other researchers to replicate the work would require listing every instance of a code being applied to an artifact, which is not feasible in a 10-page paper. In approaches such as grounded theory, the problem gets worse, as codes would have to be considered at different levels of detail (i.e., open coding, axial coding, selective coding). Instead of including all these details in their papers, some researchers choose to host the details online. That is not possible in all research settings though. For example, researchers who have access to proprietary data are usually not allowed to make their data available online.

To provide at least some traceability to readers and reviewers, we assigned a unique identifier to each of our interviewees. The identifier also indicates the role of the interviewee, which adds context without revealing confidential information (e.g., M1 for the first manager we interviewed, and D1 for the first developer). When quoting individuals in our paper, we referred to the interviewees using these identifiers. The right number of quotes in a qualitative research paper is a question of style. Some researchers prefer many exemplary quotes to make the research more concrete; others prefer generalizations and therefore discourage the use of concrete quotes. We found it easier to tell the story in a paper using quotes. However, it is important to understand that these quotes are only meant to represent a much larger body of qualitative data.
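The identifier scheme is simple enough to sketch in code. The snippet below is only an illustration: the function name, the role-to-prefix mapping, and the example list of roles are assumptions, and only the M1 and D1 convention comes from our paper.

```python
from collections import Counter

# Assumed mapping from interviewee role to identifier prefix.
ROLE_PREFIX = {"manager": "M", "developer": "D"}


def assign_identifiers(roles):
    """Return identifiers such as M1 or D2, numbered per role in interview order."""
    counts = Counter()
    identifiers = []
    for role in roles:
        prefix = ROLE_PREFIX[role]
        counts[prefix] += 1
        identifiers.append(f"{prefix}{counts[prefix]}")
    return identifiers


# Hypothetical interview order, for illustration only.
ids = assign_identifiers(["manager", "developer", "developer", "manager"])
```

Numbering within each role keeps the identifiers short while still telling the reader what kind of participant a quote comes from.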

In summary

Grounded theory is a great methodology to understand the “how” and “why” of a research problem. Making the coding process explicit and going through data on a line by line basis allows for new insights, and also ensures that no important themes are overlooked. While the coding and the reporting of results can be tedious, grounded theory should be in the toolbox of every researcher who tries to understand processes in software development and beyond.

PS – Thanks to Fernando Figueira Filho for proof-reading a draft version of this post!

Thanks for the interesting post. I have a question about the data gathering. It seems that if you don’t elicit some topic, then it won’t be a category, since categories emerge based on frequency. And maybe some things (in the study) are important but tacit – the unknown knowns. For example, let’s say that no one mentions the fact that you have to register with IBM in order to use Jazz. Does that mean the issue of online privacy never emerges? Or does this get developed more fully now that there is an embryonic theory, when you return to do confirmatory studies?

Great question.

The short answer is that we collected data not only through interviews but also through a 3-month ethnographic study and through inspection of the artifacts in the community portal. The belief is that these tacit things would’ve come up somehow if they were important.

The long answer has something to do with the tricky role of research questions in grounded theory. Data collection methods should be driven by the initial research questions, and these questions should be refined before advancing to the next phase. So if the initial research questions don’t include a certain topic, chances are it won’t come up. However, if the topic is somewhat related to the initial questions, it will probably come up — and then questions can be refined to investigate the topic further.

As I’ve written in the post, we found the concept of research questions a bit tricky in this context. We started with a very general “What is the role of community portals?” and then translated that into “How and why do you contribute to the portal?” and “How and why do you read on the portal?” for the interviews. Out of the answers to those questions and lots of follow-up questions, the theory that we describe in the paper emerged.

Like your comments about software. It seems that quite often, the computer interface gets in the way of the researcher and slows him down. I am not familiar with all of them, but Weft QDA has a pretty crude interface, while the Atlas.ti interface is convoluted and overly complex. The NVivo interface seems cleaner, but a simple task requires so many steps that it slows you down tremendously. MaxQDA is better, but the best one I have seen so far is QDA Miner. The main coding interface is very clean and allows for fast and efficient coding and memoing, while all the advanced features (and there are plenty of those) are accessible through menus. It has some limitations. For example, there are no theory-building tools in QDA Miner for visually designing graphical representations of relationships between concepts. It has, however, some nice exploratory tools to identify some of those relationships.